Are you a software professional or not?

I'm not talking about having some kind of official certification here. I'm asking whether creating high quality code on a repeatable basis is your top priority.

Professionals do everything possible to write quality code. Are you and your organization doing everything possible to write quality code? Of course, whether you are a professional or not can only be answered by your peers.

If you are not doing software inspections then you are not doing everything possible to improve the quality of your code. Software inspections are not the same as code walkthroughs, which are used mainly for educational purposes: they inform the rest of the team about what you have written. Walkthroughs will find surface defects, but most walkthroughs are not designed to find as many defects as possible.

How do defects get into the code? It's not like there are elves and goblins that come out at night and put defects into your code. If the defects are there it is because the team injected them. Many defects can be discovered and prevented before they cause problems for development. Defects are only identified when you go looking for them, and that is typically only in QA.

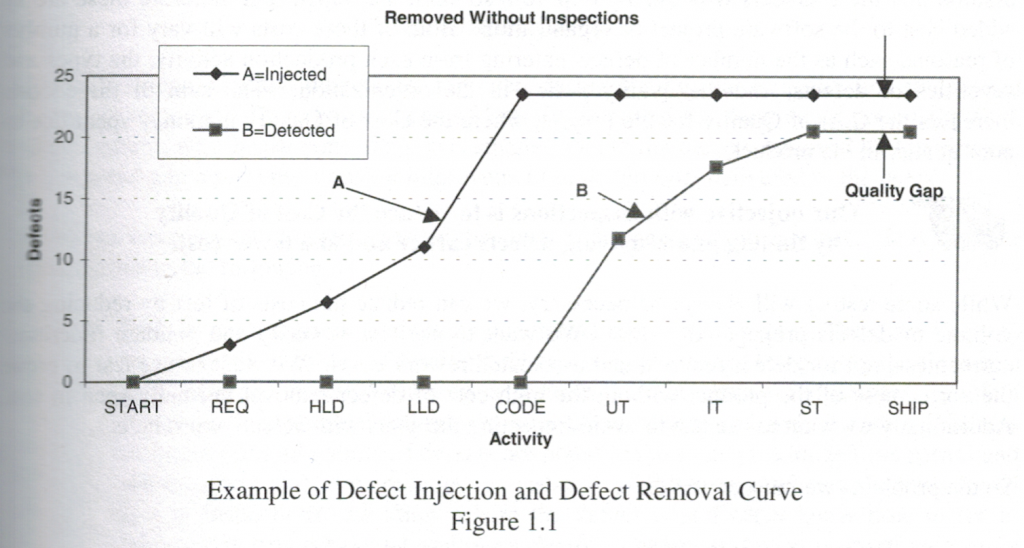

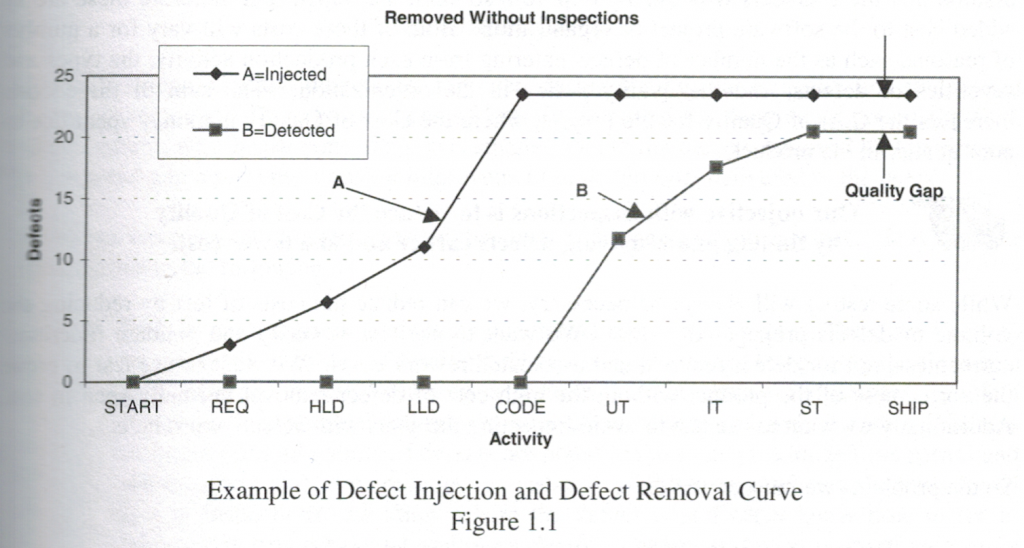

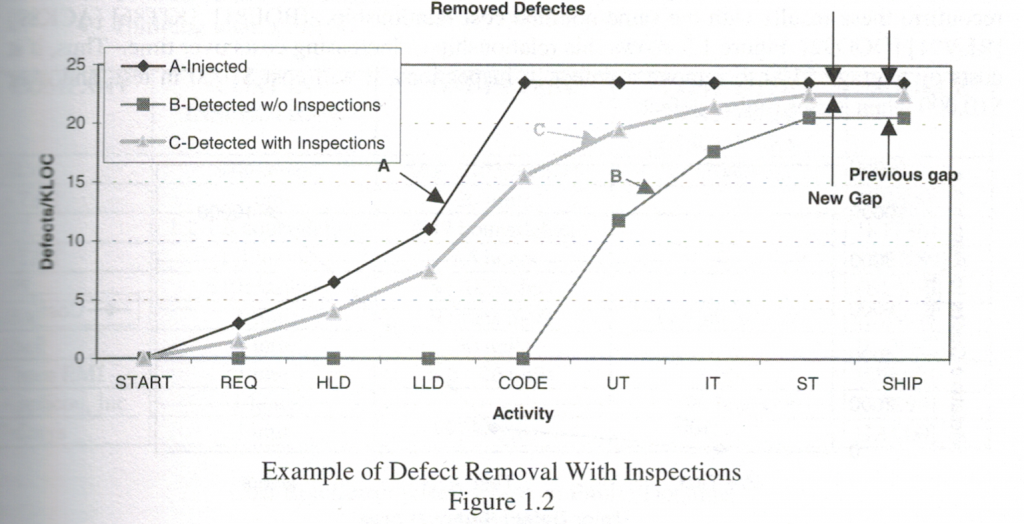

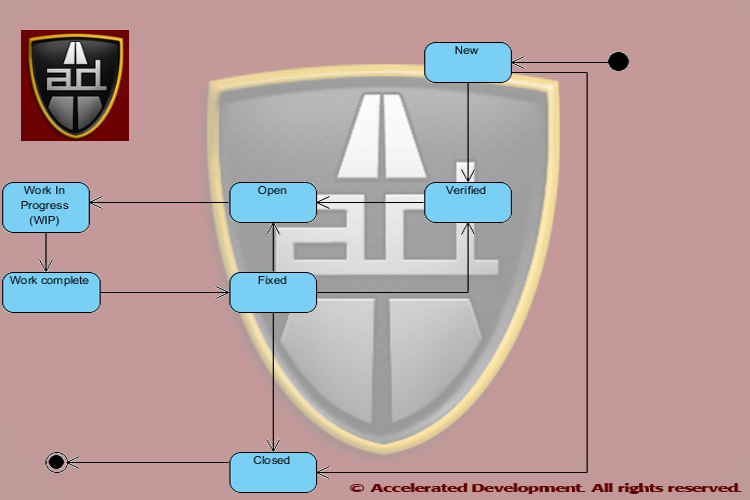

This diagram from Radice shows that defects will accumulate until testing begins. Your quality will be limited by the number of defects that you can find before you ship your software.

With inspections, you begin to inspect your artifacts (use cases, user stories, UML diagrams, code, test plans, etc.) as they are produced. You attempt to eliminate defects before they have a chance to cascade and cause defects in later phases of software development. For example, a defect in the requirements or in the architecture can cause coding problems that are detected very late (see Inspections are not Optional).

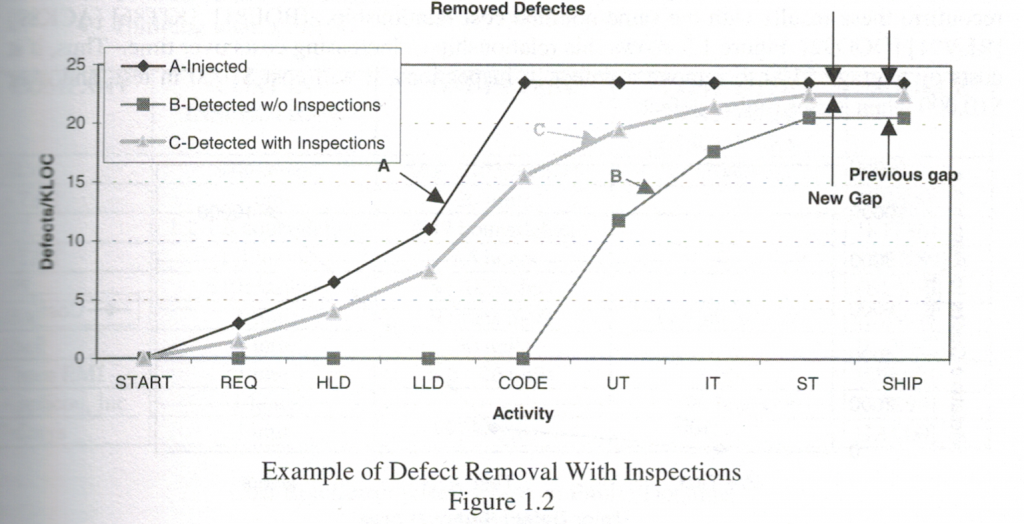

With inspections the defect injection and removal curve looks like this:

When effective inspections are mandatory, the quality gap shrinks and the quality of the software produced goes up dramatically. In The Economics of Software Quality, Capers Jones and Olivier Bonsignour show that defect removal rates rarely top 80% without inspections, but with inspections you can get to 97%.
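To make those two percentages concrete, here is a minimal sketch of the arithmetic. The figure of 1000 injected defects and the helper name `escaped_defects` are assumptions chosen for illustration; only the 80% and 97% removal rates come from the text above.

```python
def escaped_defects(injected: int, removal_pct: int) -> int:
    """Defects still present at release for a given cumulative removal rate (in percent)."""
    if not 0 <= removal_pct <= 100:
        raise ValueError("removal_pct must be between 0 and 100")
    # Integer arithmetic avoids floating-point rounding surprises.
    return injected * (100 - removal_pct) // 100


INJECTED = 1000  # hypothetical number of defects injected during development

without_inspections = escaped_defects(INJECTED, 80)  # removal rate without inspections
with_inspections = escaped_defects(INJECTED, 97)     # removal rate with inspections

print(without_inspections)  # 200
print(with_inspections)     # 30
```

Under these assumed numbers, the team without inspections ships 200 defects and the team with inspections ships 30, even though the two removal rates are only 17 percentage points apart.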

The issue is that people know that they make mistakes but don't want to admit it. Who wants to admit that they put the milk in the cupboard? They certainly don't want their peers to know about it!

Many defects in a software system are caused by ignorance, a lack of due diligence, or simply a lack of concentration. Most of these defects can be found by inspection. However, people feel embarrassed and exposed in inspections because simple problems become apparent.

For inspections to work, they must be conducted in a non-judgemental environment where the goal is to eliminate defects and improve quality. When inspections turn into witch hunts and/or the focus is on style rather than on substance then inspections will fail miserably and they will become a waste of time.

Professional software developers are concerned with high quality code. Finding out as soon as possible how you inject defects into code is the fastest way to learn how to prevent those defects in the future and become a better developer. Professionals are always asking themselves how they can become better. Do you?

Benefits of Inspections

Inspections involve several people and require intense preparation before conducting the review. The purpose of inspections is to find defects and eliminate them as early as possible. Inspections apply to every artifact of software development:

- Requirements (use cases, user stories)

- Design (high level and low level, UML diagrams)

- Code

- Test plans and cases

Why Don't We Do Inspections?

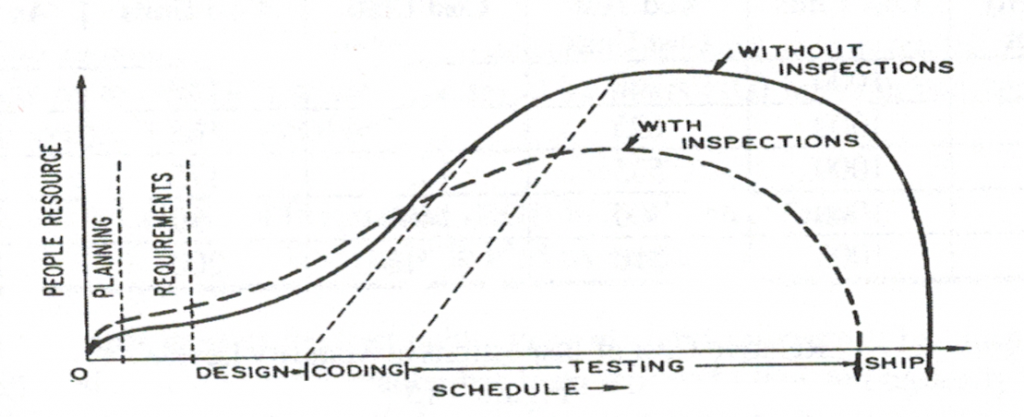

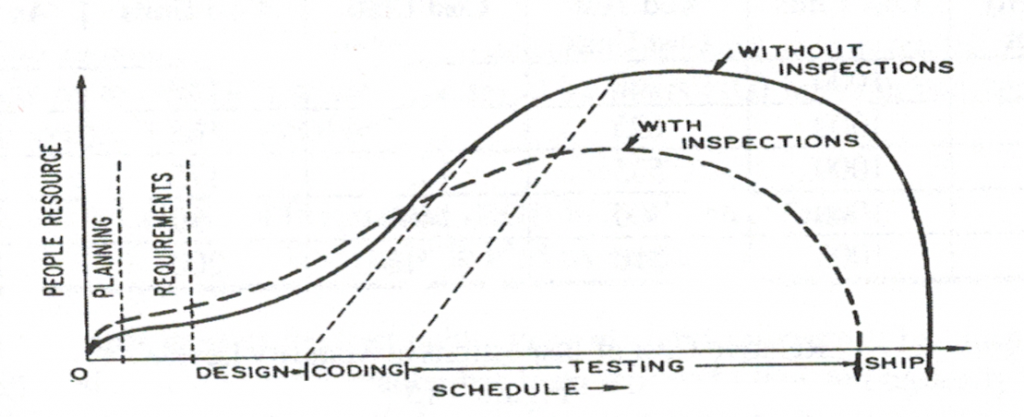

There is a mistaken belief that inspections waste time. Yet study after study shows that inspections will dramatically reduce the amount of time in quality assurance. There is no doubt that inspections require an up-front effort, but that up-front effort pays back with dividends. The hidden effect of inspections is as follows:

Conclusion

Code inspections have been practiced for 40 years, and the evidence shows that they greatly improve software quality without increasing cost or delivery time. If you are not doing inspections then you are not producing the best quality software possible.

Bibliography

- Bonsignour, Olivier and Jones, Capers; The Economics of Software Quality; Addison Wesley, Reading, MA; 2011; ISBN 10: 0132582201.

- Fagan, M.E., Advances in Software Inspections, July 1986, IEEE Transactions on Software Engineering, Vol. SE-12, No. 7, Page 744-751

- Gilb, Tom and Graham, Dorothy; Software Inspections; Addison Wesley, Reading, MA; 1993; ISBN 10: 0201631814.

- Radice, Ronald A.; High Quality Low Cost Software Inspections; Paradoxicon Publishing, Andover, MA; ISBN 0-9645913-1-6; 2002; 479 pages.

- Wiegers, Karl E.; Peer Reviews in Software – A Practical Guide; Addison Wesley Longman, Boston, MA; ISBN 0-201-73485-0; 2002; 232 pages.

The Agile manifesto supports principle 1 with the following statements:

The Agile manifesto supports principle 1 with the following statements:

Shorter development cycles where you adjust your aim is critical to building the right software system, especially if you are faced with technical or requirements uncertainty (see

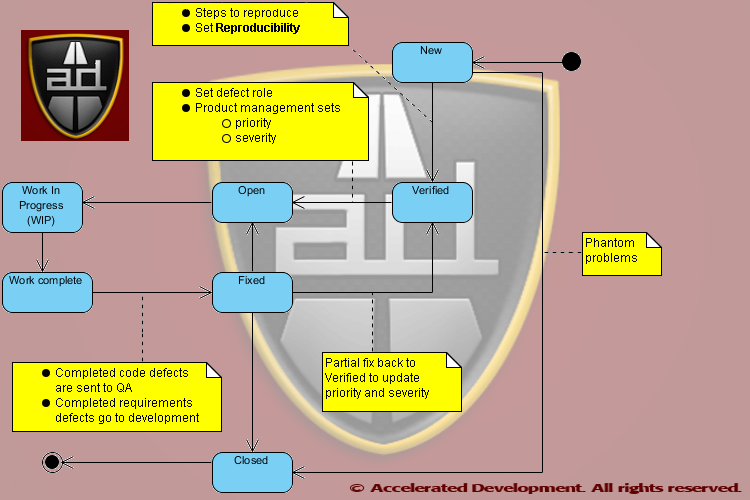

Shorter development cycles where you adjust your aim is critical to building the right software system, especially if you are faced with technical or requirements uncertainty (see  For example, in Scrum all the defects get put into the back log to be addressed at a future time. How often do people consider the source of the defect or whether it could have been avoided in the first place?

For example, in Scrum all the defects get put into the back log to be addressed at a future time. How often do people consider the source of the defect or whether it could have been avoided in the first place?

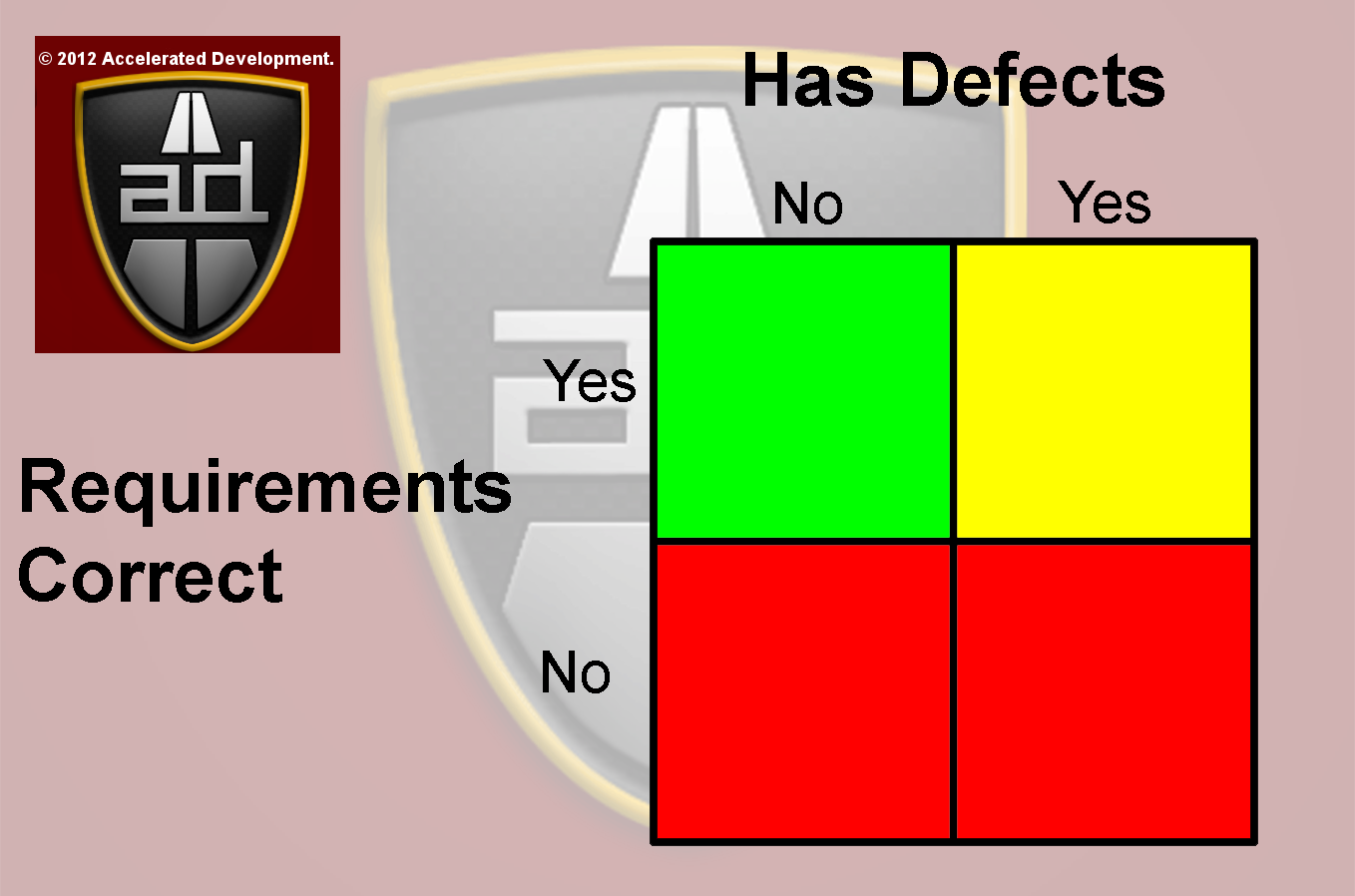

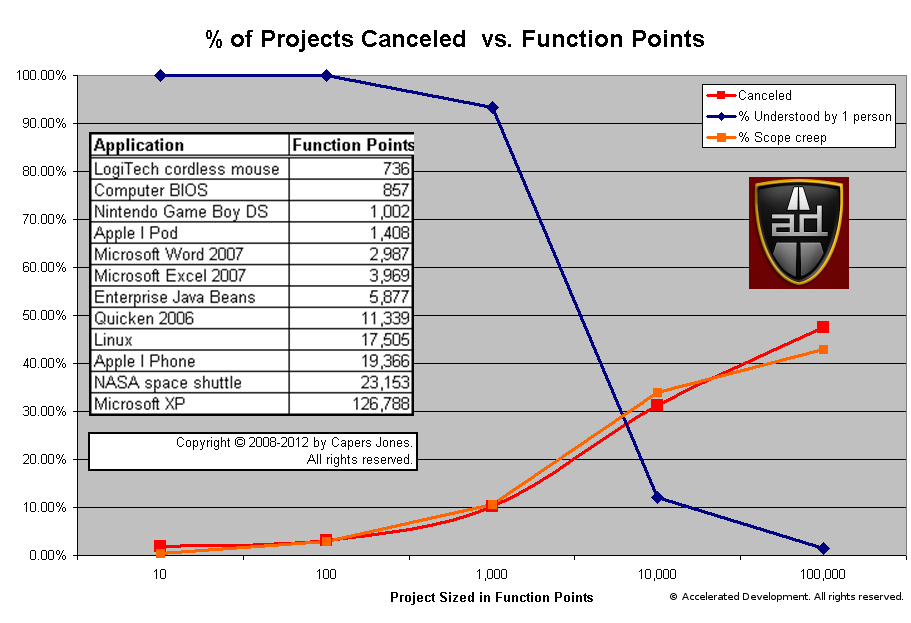

Analysis shows that the probability of a project being canceled is highly correlated with the amount of scope shift. Simply creating enhancements in the Bug Tracker hides this problem and does not help the team.

Analysis shows that the probability of a project being canceled is highly correlated with the amount of scope shift. Simply creating enhancements in the Bug Tracker hides this problem and does not help the team.